To understand Next-Gen File Integrity Monitoring (NGFIM), we must take a quick look at the history and origin of File Integrity Monitoring (FIM). FIM has remained largely unchanged because of a lack of innovation and the industry’s inability to shift its perspective from managing against a state of bad (think anti-virus) to managing from a state of good. If a quick history lesson doesn’t interest you, please jump to the section: Next-Gen FIM... What Does It Look Like?

File Integrity Monitoring - An Oxymoron by Current Industry Definition

Integrity is a long-standing requirement and one of the critical pillars of an effective security strategy. It is often associated with the SANS CIA Triad and highlighted in numerous best practice frameworks like NIST 800-53, PCI-DSS, Sarbanes-Oxley (SOX), HIPAA, NERC CIP, FISMA, CIS Controls, ISO 27001, and many others. FIM made a massive appearance in the early 2000s, not from a security perspective of intrusion detection but as a compliance mandate. Due to the complexity of deploying and operating early FIM solutions, it devolved into a compliance “check-the-box” exercise and, often, shelfware. The costs tremendously outweighed the value and ROI.

Initial FIM solutions were essentially built on the idea of establishing a baseline by cryptographically hashing a file and then detecting change when that hash value differed from the initial expected state. FIM soon became associated with terms like "noisy", "false positives", "unmanageable", and "not scalable". While all of these were true at the time, the industry perception has not changed since.
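To make the baseline idea concrete, here is a minimal sketch of classic hash-based FIM, assuming SHA-256 as the hash and a simple directory walk. The function names (`hash_file`, `build_baseline`, `detect_changes`, `demo`) and the file names are hypothetical and do not come from any particular FIM product.

```python
import hashlib
import tempfile
from pathlib import Path


def hash_file(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_baseline(root: Path) -> dict[str, str]:
    """Map each file path (relative to root) to its current hash."""
    return {
        p.relative_to(root).as_posix(): hash_file(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def detect_changes(baseline: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Compare two snapshots and report added, deleted, and modified paths."""
    shared = baseline.keys() & current.keys()
    return {
        "added": sorted(current.keys() - baseline.keys()),
        "deleted": sorted(baseline.keys() - current.keys()),
        "modified": sorted(p for p in shared if baseline[p] != current[p]),
    }


def demo() -> dict[str, list[str]]:
    """Baseline a scratch directory, tamper with it, and report the drift."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "a.txt").write_text("original")
        (root / "b.txt").write_text("unchanged")
        baseline = build_baseline(root)

        (root / "a.txt").write_text("tampered")   # modified
        (root / "b.txt").unlink()                 # deleted
        (root / "new.bin").write_bytes(b"\x00")   # added
        return detect_changes(baseline, build_baseline(root))


if __name__ == "__main__":
    print(demo())
```

Note that the report only says what changed. Nothing in it says whether a change was expected or authorized, which is exactly the limitation discussed next.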

The concept of detecting a change to a baseline has no bearing on whether you have integrity within your infrastructure; it simply tells you that something has changed. At the time, FIM couldn’t discriminate whether that change was good or bad, expected or unexpected, authorized or circumvented, malicious or non-malicious, and so on. Unforeseen executive management changes at the first FIM technology company caused it to lose sight of the overall strategy, leading to a lack of innovation and a stagnant mindset about where and how integrity fits into a comprehensive security strategy.

Detecting change doesn’t give you integrity…it’s the collective functionality surrounding the trigger of identifying change that enables you to achieve a trusted state of operation.

Blacklist (Disallow) Was a Foundation of Failure

The security industry was predominantly built on technologies that continuously tried to manage from a state of “bad.” It was reactive by the very nature of how it could identify malicious activity. Malware would be created by bad actors, and then the anti-virus companies (Symantec, McAfee, Trend Micro, and others) would need to identify that something was malicious. They would then create a program to inoculate against it and push out an update to all their subscribed users to try to block it. This process has been and always will be reactive in nature and, more importantly, unable to detect zero-day attacks.

What needed to happen was a fundamental mindset shift from trying to identify bad or unknown change to one managed from a good state. Knowing all the good and expected change(s) then highlights the change that is either a circumvented process or malicious. File reputation services (denylisting) would become just one of many data points, not the primary decision point in determining if a file/change was good or bad.


Next-Gen FIM... What Does It Look Like?

Security isn’t a product. It’s a process. It’s a process that utilizes several best practice controls, all assembled into a workflow that can answer the question: are the observed changes expected, and if so, what business process was used to authorize them? Furthermore, if the changes were unauthorized (circumvented or malicious, as previously discussed), how can they be removed and rolled back?

91% of all security breaches at the workload layer can be auto-detected when three controls are deployed across an infrastructure.

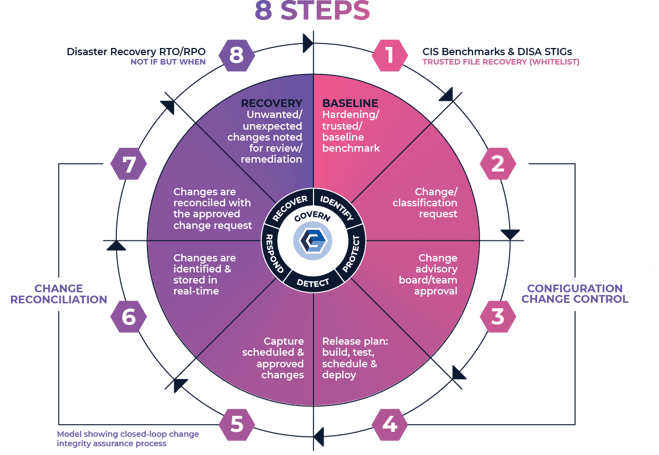

Next-Gen FIM is fundamentally made up of configuration management, change controls, and release management controls. Those controls also encompass sub-controls and processes that provide more discrete functionality to determine if the change was expected and authorized. So, what do those controls and sub-controls look like?

- System Hardening & Trusted Benchmarks - Validate and verify that your infrastructure is hardened and secure by utilizing CIS Benchmarks or DISA STIGs as your root of trust.

- Configuration Management - The management and control of configurations and baselines to minimize integrity drift and facilitate a comprehensive risk profile.

- Change Control - The process of regulating and approving changes throughout the entire operational life cycle of an information system.

- Change & Work Order Reconciliation/Curation - Highlight and compare observed changes against expected and authorized changes to create a closed-loop change control process.

- Roll-Back and Remediation - Restore to a last known trusted state of operation as measured by a baseline, NOT through reprovisioning.

- Change Prevention - Prevent changes entirely for those files and directories that should never change, avoiding the start of a security breach or operational problem.

- File Allowlisting - Leverage a database of known and trusted files, identified through hashing techniques (known as fingerprints or signatures), that provides meta-level data to validate and verify the integrity and authenticity of files.

- File Reputation Services - Leverage a database of malware signatures, which are also based on hashing algorithms, to identify and block malicious and dangerous files from execution.

- STIX & TAXII Feeds - Inform real-time security decisions and vulnerability risk assessments with continuous streams of threat intelligence, which also rely on hashing algorithms.
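Since the allowlisting and reputation sub-controls above both come down to hash lookups, they can be sketched as set membership checks. This is an illustrative sketch only: the `Verdict` enum, the `classify` function, and the sample byte strings are assumptions, and a real deployment would query curated databases or threat feeds rather than in-memory sets.

```python
import hashlib
from enum import Enum


class Verdict(Enum):
    TRUSTED = "trusted"      # hash found in the allowlist
    MALICIOUS = "malicious"  # hash found in the reputation (deny) list
    UNKNOWN = "unknown"      # hash found in neither list


def sha256_hex(data: bytes) -> str:
    """Fingerprint file contents with SHA-256."""
    return hashlib.sha256(data).hexdigest()


def classify(digest: str, allowlist: set[str], denylist: set[str]) -> Verdict:
    """Look a file hash up in both lists; a denylist hit takes precedence."""
    if digest in denylist:
        return Verdict.MALICIOUS
    if digest in allowlist:
        return Verdict.TRUSTED
    return Verdict.UNKNOWN


if __name__ == "__main__":
    # Hypothetical file contents standing in for real binaries.
    allowlist = {sha256_hex(b"vendor-signed build 1.2.3")}
    denylist = {sha256_hex(b"known ransomware payload")}
    for sample in (b"vendor-signed build 1.2.3", b"known ransomware payload", b"never seen before"):
        print(sample, classify(sha256_hex(sample), allowlist, denylist).value)
```

Checking the denylist first is a design choice in this sketch. The `UNKNOWN` verdict is the interesting one, because it is where the other controls (change tickets, configuration baselines) have to make the call.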

These controls and sub-controls are what determine if a change is expected or unexpected. When implementing this methodology, you achieve two distinct value propositions:

- Detect zero-day attacks

- Reduce change noise by more than 95%


Implementation is not complex. You assemble these controls into a simple workflow that leverages a ticketing system. You now have a closed-loop process to identify unwanted and unexpected changes, each of which is either malicious or the result of a circumvented process. There are no other choices.
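The closed-loop idea can be sketched as a reconciliation step between observed changes and approved change tickets. This is a simplified sketch under stated assumptions: the `ChangeTicket` and `ObservedChange` shapes, the host and path values, and the matching rule (same host, listed path, inside the approved window) are all hypothetical and not tied to any ticketing system's API.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class ChangeTicket:
    """An approved work order: which paths may change, where, and when."""
    ticket_id: str
    host: str
    paths: frozenset
    window_start: datetime
    window_end: datetime


@dataclass(frozen=True)
class ObservedChange:
    """A change event reported by the monitoring agent."""
    host: str
    path: str
    observed_at: datetime


def reconcile(changes, tickets):
    """Split observed changes into (authorized, unexpected).

    A change is authorized only if some ticket covers its host and path
    and the change happened inside that ticket's approved window.
    """
    authorized, unexpected = [], []
    for change in changes:
        match = next(
            (
                t for t in tickets
                if t.host == change.host
                and change.path in t.paths
                and t.window_start <= change.observed_at <= t.window_end
            ),
            None,
        )
        if match is not None:
            authorized.append((change, match.ticket_id))
        else:
            unexpected.append(change)
    return authorized, unexpected


def example():
    """One approved config change, one unticketed change, one out-of-window change."""
    ticket = ChangeTicket(
        "CHG-1001", "web01", frozenset({"/etc/nginx/nginx.conf"}),
        datetime(2023, 6, 20, 1, 0), datetime(2023, 6, 20, 3, 0),
    )
    changes = [
        ObservedChange("web01", "/etc/nginx/nginx.conf", datetime(2023, 6, 20, 1, 30)),
        ObservedChange("web01", "/usr/bin/sshd", datetime(2023, 6, 20, 1, 35)),
        ObservedChange("web01", "/etc/nginx/nginx.conf", datetime(2023, 6, 20, 9, 0)),
    ]
    return reconcile(changes, [ticket])


if __name__ == "__main__":
    authorized, unexpected = example()
    print("authorized:", [(c.path, t) for c, t in authorized])
    print("unexpected:", [c.path for c in unexpected])
```

Only the changes in the `unexpected` list would raise an alert, which is where the noise reduction comes from in this model.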

Identifying and Containing a Breach

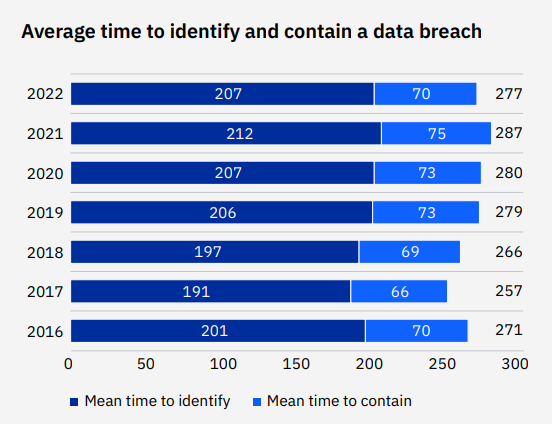

Ponemon Institute, an independent research and education organization, is most widely known for its annual Cost of a Data Breach report, published over the past seven years. Over that period, it has tracked and reported on two key metrics for identifying and containing breaches: mean-time-to-identify (MTTI) and mean-time-to-contain (MTTC). For all practical purposes, these two metrics are an industry benchmark for how well or how poorly we are doing at mitigating the risk and exposure of security threats.

It takes nine months on average to identify and contain a security breach

— IBM, Cost of a Data Breach 2022

Ponemon just released its 2022 findings, and while the average time to identify and contain a data breach improved by 10 days, to 207 days to identify and 70 days to contain (277 days in total), there is still a long way to go. This means that a bad actor can reside in your infrastructure for nearly 7 months, planning ways to compromise its integrity through disruption of service, theft of intellectual property, or other malicious activities.

Given the numerous attack vectors available, how a bad actor gains access to one’s infrastructure is becoming more and more complicated to identify. However, once a breach has occurred, the bad actor is presented with two options:

- Snoop around and try to compromise and exfiltrate sensitive information that could be used for blackmail or ransom (see the Zero Trust section of this blog for more on this topic)

- Add, modify, or delete data to further compromise the integrity of the system or device

In the case of ransomware, a bad actor needs to architect a process to deliver a malicious payload. A payload is nothing more than a piece of software being added that must then be executed to encrypt the targeted device/data. Identifying and preventing the “added” software is addressing the problem, whereas the reactive nature of trying to restore encrypted data is symptomatic of the problem.

Nevertheless, Next-Gen FIM provides the detective controls to mitigate this risk. It not only provides immediate identification when critical elements of infrastructure are changing without cause or authority but also provides a process to remediate and roll back to a trusted baseline of operation.

To help clarify this process of disaster recovery: rolling back with Next-Gen FIM simply means restoring files that were deleted, deleting files that were added, and replacing files that were modified with their original state, called a baseline. This is very different from reprovisioning, which wipes away an entire image and then rebuilds from scratch. Reprovisioning takes time, energy, effort, and money and is often measured in hours, days, or weeks. Restoring to a trusted baseline of operation after a compromise, on the other hand, can be measured in seconds.
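The three rollback actions described above can be sketched in a few lines, assuming a trusted copy of the baseline files is kept in a separate directory. That storage layout, along with the `snapshot`, `roll_back`, and `demo` names and the sample file names, is a hypothetical illustration rather than how any specific product stores or restores baselines.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def snapshot(root: Path) -> dict[str, str]:
    """Map each file path (relative to root) to its SHA-256 digest."""
    return {
        p.relative_to(root).as_posix(): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in root.rglob("*")
        if p.is_file()
    }


def roll_back(live: Path, trusted: Path) -> dict[str, list[str]]:
    """Make the files under `live` match the trusted baseline copy."""
    want, have = snapshot(trusted), snapshot(live)
    actions: dict[str, list[str]] = {"restored": [], "removed": [], "replaced": []}

    # Delete files that were added after the baseline was taken.
    for rel in sorted(have.keys() - want.keys()):
        (live / rel).unlink()
        actions["removed"].append(rel)

    # Restore deleted files and replace modified ones from the trusted copy.
    for rel in sorted(want):
        if rel not in have:
            kind = "restored"
        elif have[rel] != want[rel]:
            kind = "replaced"
        else:
            continue  # file already matches the baseline
        target = live / rel
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(trusted / rel, target)
        actions[kind].append(rel)
    return actions


def demo():
    """Tamper with a scratch copy, roll it back, and confirm it matches."""
    with tempfile.TemporaryDirectory() as tmp:
        trusted, live = Path(tmp) / "trusted", Path(tmp) / "live"
        trusted.mkdir()
        (trusted / "app.conf").write_text("mode=safe")
        (trusted / "lib.so").write_text("library")
        shutil.copytree(trusted, live)

        (live / "app.conf").write_text("mode=pwned")   # modified
        (live / "lib.so").unlink()                     # deleted
        (live / "payload.bin").write_text("payload")   # added

        actions = roll_back(live, trusted)
        return actions, snapshot(live) == snapshot(trusted)


if __name__ == "__main__":
    print(demo())
```

Only the files that drifted are touched, which is why this kind of restore can be so much faster than rebuilding an image. The sketch leaves behind any empty directories an attacker created and ignores permissions and ownership, both of which a real tool would need to handle.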

45% of breaches occurred in the cloud.

— IBM, Cost of a Data Breach 2022

One of the biggest fallacies in the world of IT is the argument that you can just move everything to the cloud and things will take care of themselves. This couldn’t be further from the truth. The Ponemon results also noted that in 2022, 45% of breaches occurred in the cloud. Breaches have no boundaries, whether you operate in the cloud, on-prem, or hybrid.

How Does Next-Gen FIM Align with Zero Trust?

In May 2021, President Biden issued Executive Order (EO) 14028 on Improving the Nation’s Cybersecurity in response to a series of high-profile attacks targeting US federal agencies as well as technology vendors. The EO focused on expanding several cybersecurity capabilities for government agencies, most notably mandating a shift toward Zero Trust principles. Now, these principles aren’t anything new. They are a rearrangement of existing best practice controls (e.g., NIST 800-53) to meet the objective and definition of a Zero Trust Architecture (ZTA), which NIST had already defined in Special Publication 800-207, Zero Trust Architecture, released in August 2020. The primary aim is to protect sensitive information and intellectual property by giving the right people access to the right assets at the right time.

So, where and how does Next-Gen FIM align with ZT/ZTA? The best reference point is NIST SP 800-207, which articulates the seven tenets of a Zero Trust Architecture. Tenet #5 specifically calls out monitoring and measuring the integrity of all owned and associated assets. Those who think that Zero Trust focuses simply on identity and access are overlooking that it also includes a workload component. Think of the ramifications if a ransomware attack hit a ZTA. Yes, an attacker can encrypt data that is already encrypted, and then you find yourself back at square one, with business interruption and users/customers having problems gaining access to their data.

Next-Gen FIM and Compliance

Integrity and compliance go hand in hand. Compliance is the set of checks and balances that verifies IT has adopted the necessary controls to mitigate the risk of breaches and other security events. It not only provides confidence that the correct controls are in place but also assurance that those controls are operating as expected. Interestingly enough, the controls described in Next-Gen FIM... What Does It Look Like? crosswalk, on average, to approximately 30% of all best practices and compliance mandates. Crosswalk means that they provide a control or automated scan, or enable a process, procedure, or policy, that assists with the evidence collection needed to meet the objective of a defined domain, category, control, standard, component, or assessment factor.

In the case of the Center for Internet Security (CIS) Controls, CIS has established a security best practice that defines 18 domains or categories containing a total of 153 controls, which it calls safeguards. These safeguards are then prioritized into Implementation Groups (IG1, IG2, and IG3) that prescribe which controls must be considered first, second, and third. Implementation Group 1 (IG1) comprises the most basic and foundational controls an organization MUST implement to detect, protect against, respond to, and recover from most security threats. Notably, 20 of the 56 safeguards in IG1 are integrity controls.

To learn more about CIS Controls check out: CIS Controls: What Are They and Should You Use Them?

Next-Gen FIM and DevOps

The final question remains: is there a value proposition for Next-Gen FIM to support Development, Operations, or both? The answer is an unequivocal yes. The integrity functionality described in Next-Gen FIM... What Does It Look Like? has a unique capability of providing significant value to both.

IT Operations is simply managing and delivering a comprehensive set of IT services to customers, whether those customers are internal or external to the organization. This encompasses the processes and activities to design, build, test, schedule, deploy, and support IT services. One of the biggest similarities with security is the measurement of mean-time-to-detect (MTTD) and mean-time-to-resolve/repair (MTTR). These two metrics correlate directly with security’s MTTI and MTTC, as discussed earlier. And to no surprise, integrity controls are used in the same manner to reduce MTTD and MTTR. These operational metrics are closely tied to Recovery Time Objective (RTO) and Recovery Point Objective (RPO) targets, with the overall goal of maintaining service availability.

Development, while not directly related to integrity management functionality per se, has a significant value proposition when discussing software supply chain security. Software supply chain issues have been making headlines in connection with SolarWinds, Log4j, and many others. A software supply chain attack occurs when a bad actor infiltrates a software vendor's development process to insert malicious code before the software's official release. The actions of a bad actor mostly fall into one of four types of compromise:

- Developer is a bad actor. This is the most obvious and hard-to-combat scenario. If a bad actor has direct access to the development process and environment, they will have many opportunities to inject malicious code.

- Compromise of open-source code. Many developers use open-source code libraries (sections of pre-written code) as ‘building blocks’ to add common functionality to their code. Unfortunately, it has become common practice for bad actors to inject malicious code into popular open-source projects and distribute them via fake websites. Since open-source code is routinely built into commercial software, this can lead to serious vulnerabilities in popular software products. Worse, customers typically don’t know products contain open-source code, so they are unable to act if it later emerges that the code includes vulnerabilities.

- Hijack of software updates. Software updates are essential to fix bugs, add new features, and resolve security weaknesses. Customers typically download software updates from a central server owned by the software vendor. By hijacking this infrastructure, bad actors can inject malicious code into legitimate software updates, which are trusted implicitly and installed by customers.

- Undermining codesigning. Software vendors use codesigning to verify the source, authenticity, and integrity of software downloads and updates. Bad actors can subvert codesigning by self-signing certificates, abusing signing systems, or exploiting account access controls. This enables them to disguise malicious software as legitimate software or updates, often including the expected legitimate functionality to avoid arousing suspicion, so that customers install it with implicit trust.

Once software passes through the CI/CD pipeline and is installed at customer locations, bad actors have the means to carry out nefarious and criminal actions. With a bad actor living unnoticed within an infrastructure, Next-Gen FIM becomes a leading indicator that a software supply chain issue exists.

Next-Gen FIM Summary

Having been a part of early best practices on both the security and operations side of the equation, I am frustrated and amused at the fact that more than 85% of all controls in every major framework are the same. They simply have different verbiage and terminology to define a common control requirement.

The problem I have experienced is that most IT professionals still think all FIM tools only detect change against a baseline, which cannot be further from the truth. Once you understand the functionality of Next-Gen FIM and its broad application and added value for DevSecOps and compliance, unparalleled value can be unleashed.

Security

- Identify and prevent malicious change(s) on critical systems in REAL-TIME!

- Easily remediate unwanted change(s) and roll-back in seconds

- Detect and contain breaches immediately rather than the industry average of 277 days

- Alerts that matter - 95%+ noise reduction

- Zero Day Detection - identify the 550k malicious code variants per day that the anti-virus vendors do not recognize

Compliance

- Always be audit-ready through an ability to provide continuous evidence

- Easily fix compliance failures with step-by-step instructions to correct failed compliance requirements

- Hardened systems using industry-recognized configuration benchmarks

- Gain visibility when systems drift away from a hardened state

- Drastically reduce audit costs

Operations

- Identify and prevent circumvented processes and change(s) on critical systems in REAL-TIME!

- Easily remediate unexpected change(s) and roll-back in seconds

- Alerts that matter - 95%+ “change noise” reduction

- Evidence and audit trail of authorized changes and work orders completed as expected

Development

- Leading indicator that there is a software supply chain security issue or vulnerability

To learn more about successfully using file integrity monitoring to its full potential, download our free guide!

June 20, 2023